Visual and Interactive Evaluation of Recommender Systems

When building modern, real-world artificial intelligence systems, it is increasingly important to validate that they work correctly. This is, however, not an easy task. Existing tools for machine learning practitioners are poor at providing insight into how AI models work for individual groups of end users. This is particularly important for detecting and mitigating bias in one of the most widespread kinds of AI models: recommender systems. We have built and open-sourced RepSys, a tool for interactive evaluation of recommender systems, to help the machine learning community build better models.

Here we demonstrate what you can achieve with our tool. We have selected a dataset well known to the community, but you can easily replace it with your own datasets and models.

Recommending Books (GoodReads-10k)

Popular machine learning tools such as Jupyter notebooks support basic exploratory data analysis, model training and evaluation. A typical example is presented in this notebook.

Analysing Training Data Using RepSys

Our library projects the rating (interaction) matrix into two dimensions, revealing similarities among items (columns) or users (rows).
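The article does not say which projection RepSys uses. As a hedged illustration only, the sketch below uses plain PCA, a simple linear projection that may differ from the tool's actual method, to place each item of a toy users x items matrix in 2D: items are centered, their Gram matrix is built, and its top two eigenvectors (found by power iteration) give the coordinates. The function names and toy data are hypothetical; passing the transposed matrix would project users instead.

```python
import math
import random

def top_eigenpairs(mat, k, iters=200, seed=0):
    """Top-k eigenpairs of a symmetric PSD matrix via power iteration with deflation."""
    n = len(mat)
    a = [row[:] for row in mat]
    rng = random.Random(seed)
    pairs = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # the remaining spectrum is zero
            v = [x / norm for x in w]
        lam = sum(v[i] * a[i][j] * v[j] for i in range(n) for j in range(n))
        pairs.append((lam, v))
        # Deflate: remove the found component so the next pass finds the next one.
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return pairs

def project_items_2d(X):
    """PCA of the item columns of a users x items matrix X: one (x, y) point per item."""
    n_users, n_items = len(X), len(X[0])
    cols = [[X[u][i] for u in range(n_users)] for i in range(n_items)]
    mean_col = [sum(c[u] for c in cols) / n_items for u in range(n_users)]
    centered = [[c[u] - mean_col[u] for u in range(n_users)] for c in cols]
    gram = [[sum(p * q for p, q in zip(ci, cj)) for cj in centered] for ci in centered]
    pairs = top_eigenpairs(gram, 2)
    # PCA scores are eigenvectors of the Gram matrix scaled by sqrt(eigenvalue).
    return [tuple(math.sqrt(max(lam, 0.0)) * v[i] for lam, v in pairs) for i in range(n_items)]
```

On a toy matrix where one group of users reads items 0 and 1 and another reads items 2 and 3, items 0 and 1 land on the same point and far from items 2 and 3, which is the kind of cluster structure the projection is meant to reveal.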

You can then use item attributes to explore, for example, where clusters of older books are located in the projection. In the same way, you can find clusters of users who enjoy reading old books.

When you select a cluster, you can inspect the most frequent values of categorical attributes or the distribution of numerical ones.
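A minimal sketch of such a cluster summary, assuming items are stored as dictionaries with a multi-valued categorical attribute (hypothetically `genres`) and a numerical one (hypothetically `year`). It counts the most frequent categorical values and reports min/median/max for numerical attributes. This is an illustration, not RepSys's code.

```python
from collections import Counter
from statistics import median

def describe_cluster(items, selected_ids, categorical, numerical, top=3):
    """Most frequent categorical values and a min/median/max summary of numerical ones."""
    rows = [items[i] for i in selected_ids]
    summary = {}
    for attr in categorical:
        # Assumption: categorical attributes such as genres are lists of tags.
        summary[attr] = Counter(v for row in rows for v in row[attr]).most_common(top)
    for attr in numerical:
        values = [row[attr] for row in rows]
        summary[attr] = {"min": min(values), "median": median(values), "max": max(values)}
    return summary

# Hypothetical toy catalogue.
books = {
    1: {"genres": ["fantasy", "paranormal"], "year": 2008},
    2: {"genres": ["paranormal", "romance"], "year": 2010},
    3: {"genres": ["classics"], "year": 1847},
    4: {"genres": ["paranormal"], "year": 2012},
}
summary = describe_cluster(books, [1, 2, 4], ["genres"], ["year"])
```

For the selected cluster {1, 2, 4}, "paranormal" is the most frequent genre and the median publication year is 2010.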

Using this approach, you can gain a good understanding of the item latent space and of how individual clusters of items relate to or are separated from each other.

Analysing Models Using RepSys

Once you find no flaws in the user-item interactions, you can proceed to build recommendation models. Typically, the dataset is split into train and test sets, and you observe metrics aggregated over all test users.
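As a hedged sketch of that protocol: hold out part of each test user's interactions, let a model recommend from the rest, and average recall@k over test users. The recall variant below (hits divided by min(k, number of held-out items)) is one common definition and an assumption here; RepSys's exact formula may differ, and the helper names are hypothetical.

```python
import random
from statistics import mean

def holdout(items, fraction=0.2, seed=0):
    """Split one test user's interactions into visible (fed to the model) and held-out items."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_held = max(1, int(len(items) * fraction))
    return items[n_held:], items[:n_held]

def recall_at_k(recommended, relevant, k=10):
    """Held-out items found in the top-k list, normalised by min(k, |relevant|)."""
    hits = len(set(recommended[:k]) & set(relevant))
    return hits / min(k, len(relevant))

def mean_recall(recs_by_user, heldout_by_user, k=10):
    """Aggregate recall@k over all test users that have held-out items."""
    return mean(
        recall_at_k(recs_by_user[u], held, k)
        for u, held in heldout_by_user.items()
        if held
    )
```

The single number returned by `mean_recall` is exactly the kind of aggregate that hides how a model behaves for individual user groups.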

As you can see, on this dataset the best-performing model in terms of recall is EASE, followed by KNN. It is also useful to look at other criteria, for example APL, which stands for the average percentage of long-tail items recommended by a model; the higher the value, the better. The bestseller model (POP) recommends only popular items, while the non-personalized random model (RAND) suggests many bizarre long-tail items. EASE manages to offer both high recall and high APL, which is challenging.
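APL depends on how the long tail is defined, which the article does not specify. The sketch below assumes, hypothetically, that the head is the top `head_fraction` of the catalogue by interaction count and everything else (including never-seen items) is long tail; APL@k is then the share of long-tail items in each user's top-k list, averaged over users.

```python
from collections import Counter
from statistics import mean

def long_tail_items(interactions, all_items, head_fraction=0.2):
    """Assumed definition: all items outside the top `head_fraction` by popularity."""
    counts = Counter(item for _, item in interactions)
    ranked = sorted(all_items, key=lambda item: counts[item], reverse=True)
    n_head = int(len(ranked) * head_fraction)
    return set(ranked[n_head:])

def apl_at_k(recs_by_user, tail, k=10):
    """Average, over users, of the fraction of long-tail items in the top-k list."""
    return mean(
        sum(item in tail for item in recs[:k]) / len(recs[:k])
        for recs in recs_by_user.values()
    )
```

Under this definition a pure bestseller model scores close to zero, while a random model scores close to the long-tail share of the catalogue, matching the POP and RAND behaviour described above.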

A single number such as an average or median is not enough to understand how models work for particular users and items. RepSys can visualize the distribution of every metric and relate it to specific clusters in the latent space. As the image shows, EASE recommends particularly well to users in the upper clusters (paranormal and recent fiction books), because their preferences are more predictable.
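Relating a metric to clusters boils down to grouping per-user values by cluster label. A minimal sketch, assuming per-user metric values (for example recall@10) and a user-to-cluster mapping are already computed; the cluster names are hypothetical and this is not RepSys's implementation.

```python
from collections import defaultdict
from statistics import mean, median

def metric_by_cluster(user_metric, user_cluster):
    """Group per-user metric values by cluster and summarise each group's distribution."""
    groups = defaultdict(list)
    for user, value in user_metric.items():
        groups[user_cluster[user]].append(value)
    return {
        cluster: {
            "n": len(vals),
            "mean": mean(vals),
            "median": median(vals),
            "min": min(vals),
            "max": max(vals),
        }
        for cluster, vals in groups.items()
    }

recall_10 = {"u1": 0.5, "u2": 0.25, "u3": 0.1}
clusters = {"u1": "paranormal", "u2": "paranormal", "u3": "classics"}
per_cluster = metric_by_cluster(recall_10, clusters)
```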

Even more interesting is comparing two different models (e.g. EASE and POP) and observing the relative difference.
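How RepSys computes the relative difference is not stated here. As an assumption, the sketch below takes the per-user difference of a metric between model A and model B and averages it within each cluster: positive values mark clusters where A serves users better. The model scores and cluster labels are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def compare_models(metric_a, metric_b, user_cluster):
    """Mean per-user metric difference (model A minus model B) for each user cluster."""
    diffs = defaultdict(list)
    for user in metric_a.keys() & metric_b.keys():
        diffs[user_cluster[user]].append(metric_a[user] - metric_b[user])
    return {cluster: mean(values) for cluster, values in diffs.items()}

ease = {"u1": 0.5, "u2": 0.75, "u3": 0.25}
pop = {"u1": 0.0, "u2": 0.25, "u3": 0.25}
clusters = {"u1": "paranormal", "u2": "paranormal", "u3": "mainstream"}
gap = compare_models(ease, pop, clusters)
```

In this toy data the two models tie on the mainstream cluster, while EASE is clearly ahead on the niche one.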

Clearly, the bestseller model (POP) has a strong bias (high error) on a niche cluster of readers who enjoy paranormal books: these books are not popular enough to be recommended by this model. EASE, on the other hand, can make these users very satisfied. Using such comparisons, you can tune the hyperparameters of your models to achieve reasonable performance for all user groups.

For the GoodReads dataset, the optimal number of neighbors for the user-based KNN model is around 50. We can investigate what happens if this hyperparameter is set too low (overfitted model) or too high (underfitted model). We chose the APL@10 metric, which tells us the percentage of long-tail items among the 10 most relevant items recommended by a model. Apparently, the 1NN model recommends too many long-tail items to mainstream users in the center of the latent space, who probably prefer more popular items. On the other hand, the 5000NN model is not capable of recommending long-tail items at all. Compared to 50NN, the error (bias) of this model is much higher for niche users on the periphery of the latent space. Note that users shown in green receive a proportion of long-tail items similar to that of the model with K close to the optimum, so their recall is also good.
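To make the role of K concrete, here is a minimal user-based KNN sketch, assuming binary interactions stored as sets and cosine similarity between users; it is a generic illustration, not RepSys's KNN implementation, and the function names are hypothetical. Unseen items are scored by the summed similarity of the k most similar users.

```python
import math
from collections import defaultdict

def cosine(a, b):
    """Cosine similarity of two binary interaction sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))

def user_knn_recommend(user_items, history, k=50, n=10):
    """Score items unseen in `history` by the summed similarity of the k nearest users."""
    neighbours = sorted(
        ((cosine(history, items), user) for user, items in user_items.items()),
        reverse=True,
    )[:k]
    scores = defaultdict(float)
    for sim, user in neighbours:
        if sim <= 0.0:
            break  # the remaining users share no items with this one
        for item in user_items[user] - history:
            scores[item] += sim
    return sorted(scores, key=lambda item: (-scores[item], item))[:n]

users = {"u1": {"a", "b"}, "u2": {"a", "c", "d"}, "u3": {"e"}}
```

With k=1, the list comes entirely from the single closest user, whose history may be niche; as k grows, scores accumulate over many users and tend to favour items that are popular among them, which is consistent with the 1NN and 5000NN behaviour described above.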

The error of the overfitted model (1NN) is higher for almost all users: the model often recommends bizarre items to niche users and rarely recommends popular items, which harms its performance for mainstream users. The underfitted model (5000NN) correctly recommends popular content to the mainstream, and the main source of its error is its bias towards niche clusters on the periphery of the latent space.

Comparing Recommendations Using RepSys

The relative performance on a cluster of users or items is still just a number. Offline evaluation will always be biased: it is impossible to predict what would happen if a user were exposed to recommendations from the evaluated model that are not present in the offline data. For this reason, RepSys lets you compare the recommendations of multiple models for individual users from the training set and for simulated users in the interactive mode. You can click on the suggested items and instantly observe how the recommendations change.
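For a model like EASE, a simulated user can be scored without any retraining, which suits this kind of click-and-update exploration. The sketch below is a pure-Python illustration of the published EASE closed form, B = I - P diag(1/diag(P)) with P = (X^T X + lam*I)^-1 and a zero diagonal, not RepSys's code; the regularization value, toy matrix and helper names are assumptions, and the Gauss-Jordan inverse is only meant for toy sizes.

```python
def invert(m):
    """Gauss-Jordan inverse with partial pivoting (toy sizes only)."""
    n = len(m)
    a = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [row[n:] for row in a]

def ease_fit(X, lam=100.0):
    """Item-item weights B: B[i][j] = -P[i][j] / P[j][j] off the diagonal, 0 on it."""
    n = len(X[0])
    gram = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0) for j in range(n)] for i in range(n)]
    P = invert(gram)
    return [[0.0 if i == j else -P[i][j] / P[j][j] for j in range(n)] for i in range(n)]

def recommend(B, x, n=3):
    """Score a (possibly simulated) user's interaction vector x as x @ B and skip seen items."""
    size = len(B)
    scores = [sum(x[i] * B[i][j] for i in range(size)) for j in range(size)]
    unseen = [j for j in range(size) if not x[j]]
    return sorted(unseen, key=lambda j: -scores[j])[:n]

X = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
B = ease_fit(X, lam=1.0)
```

A click simply sets one entry of `x` to 1, and the new recommendations come from one vector-matrix product.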

As you can see, after two interactions the KNN model still recommends the bestselling Hunger Games book in the first position, while EASE is able to suggest niche content that seems more relevant.

If you like our work, cite us and give us feedback. We are also looking for contributors to the RepSys library.

Next Articles

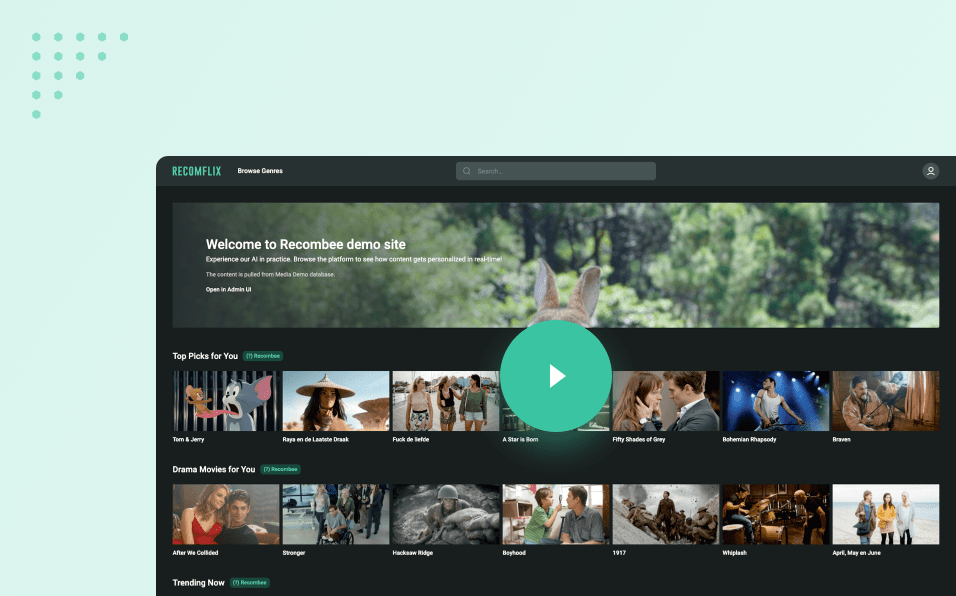

How We Are Using AI to Power Content Recommendations

In this article we walk you through how we are using the AI recommendation engine Recombee embedded in our headless CMS StoryBlok to drive content recommendations throughout our own website.

Real-Time Personalization of Content With AI-Powered Recommendations

Do you manage a publishing company, online gaming platform, or a streaming site with a content-heavy catalog and are thinking about how to improve the user experience?

AI-Powered Content Recommendations With a Headless CMS

Thanks to its API-first nature, it is quite straightforward to integrate your headless CMS with the most powerful AI-powered content recommendations available on the market. Luminary just did that with their own website, Kontent.ai and Recombee.